Reconfigure legacy systems to be routed through Iguana

Introduction

Your first challenge is to figure out the flow of those interfaces so that you can reconfigure them to be routed through Iguana. There are immediate advantages in doing this because you will be moving from a regime of very limited visibility to much greater visibility. You’ll be able to:

- See which interfaces are running.

- Get clear logging of every transaction.

- See how much data is going through each interface.

- Set up email alerts etc. if the status of an interface changes.

All through Iguana’s pleasant, modern, intuitive interface.

You’ll also be in a position to meet the demands for changes to existing functionality that your boss wanted yesterday, because Iguana can alter the data flowing through it without you needing to immediately replace the existing infrastructure.

Just as importantly, this first step puts you in a position to regression test your existing interfaces against the new interfacing code you write with Iguana.

Next we address some of the typical interfaces you may encounter, and how to insert Iguana into the pipeline: Point to Point (going from one port to another port), HL7 to Database (HL7 translated into SQL statements), and More complicated…

Point to Point

Many HL7 interfaces are single direction going from point to point.

One system opens a TCP/IP connection to the second system, sends HL7 messages and receives ACK messages back. These are merely ‘protocol ACKs’, which is to say “I got your data, you don’t need to send that message again.”
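To make that exchange concrete, here is a minimal sketch in Python (used purely for illustration; it is not Iguana code) of the framing that LLP puts around each message and of a bare-bones protocol ACK. The function names are hypothetical, and the ACK assumes the inbound MSH segment carries at least twelve fields and that reusing the inbound timestamp is acceptable.

```python
# Framing bytes used by the HL7 lower layer protocol (LLP/MLLP):
# a vertical tab before the message, file separator + carriage return after.
START_BLOCK = b"\x0b"
END_BLOCK = b"\x1c\x0d"


def frame(message: bytes) -> bytes:
    """Wrap a raw HL7 message in LLP framing bytes."""
    return START_BLOCK + message + END_BLOCK


def unframe(data: bytes) -> bytes:
    """Strip LLP framing; raise ValueError if the frame is incomplete."""
    if not (data.startswith(START_BLOCK) and data.endswith(END_BLOCK)):
        raise ValueError("not a complete LLP frame")
    return data[len(START_BLOCK):-len(END_BLOCK)]


def build_protocol_ack(message: bytes) -> bytes:
    """Build a minimal 'accept' ACK that echoes the control ID (MSH-10)."""
    msh = message.split(b"\r")[0].split(b"|")
    control_id = msh[9]
    # Swap sender and receiver so the ACK is addressed back to the source.
    ack_msh = b"|".join([
        b"MSH", msh[1], msh[4], msh[5], msh[2], msh[3],
        msh[6], b"", b"ACK", control_id, msh[10], msh[11],
    ])
    return ack_msh + b"\r" + b"MSA|AA|" + control_id + b"\r"
```

The sending system only needs the `MSA|AA|<control ID>` segment to know it can stop resending; nothing in this ACK says anything about whether the receiving application processed the data.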

There are variants on this scheme: for example, the receiving system may be sensitive to whether the first system maintains persistent connections. Fortunately these are all things which can be configured easily in Iguana.

So what you need to do is pick a time after hours when neither system is in use, pop a couple of Tylenol and carefully reconfigure these point to point interfaces to go through an Iguana instance.

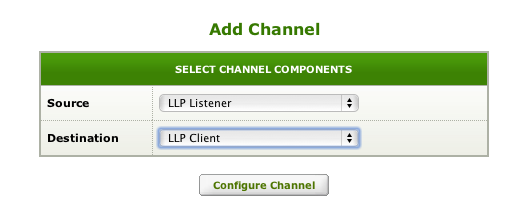

The principle is simple enough. You can set up one channel in Iguana with an LLP Listener source and an LLP Client destination. The Iguana server should be on a machine with a static IP address or host name in your local area network. Log in to the dashboard, click on the Add Channel button to get this screen, and select LLP Listener and LLP Client:

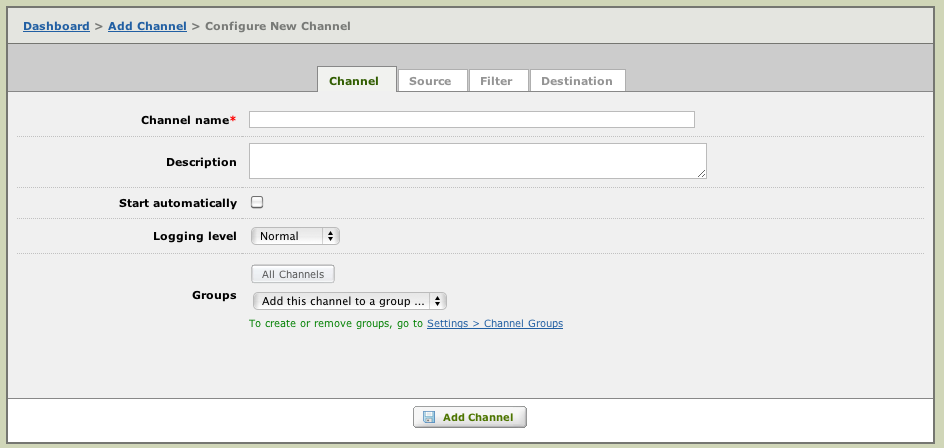

Click on Configure Channel to open the channel configuration screen.

From here you give the channel a nice name and a description of what it does. If you click on the Source and Destination tabs you can configure the port that the channel will listen on and the host and port that it will store and forward data to. There are a host of configuration options you can use which are nicely described in the context sensitive help – just leave them as the defaults for now.

The LLP client should be configured to point to the host and receiving port of the destination system.

To get things working you need to reconfigure the source system to point at Iguana’s listening port; Iguana then stores and forwards the data to the original destination system.

HL7 to Database

This is a pretty common scenario for a vendor – you take HL7 in, put it through some business logic and at the end of the day map it out into SQL INSERTs and UPDATEs against a database.

The SQL might be implicit, i.e. generated inside a library that is part of the old technology, or explicit, where your legacy code is actually building SQL strings.

Intercept the SQL

The place to start with this problem is to figure out a way of logging that SQL. This is always going to be possible in some way. Often, if the legacy code is written in a scripting language like JavaScript, a helpful technique is to create a wrapper function around the library calls used to make SQL calls. In this way all the SQL generated per HL7 message can be recorded.
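As a rough illustration of the wrapper idea, the sketch below uses Python with an in-memory SQLite database standing in for whatever database library the legacy code really uses. `SqlRecorder` and its attributes are hypothetical names; the only assumption about the legacy code is that its SQL calls can be redirected through one object.

```python
import sqlite3
from collections import defaultdict


class SqlRecorder:
    """Wraps a DB-API connection and records every statement it executes."""

    def __init__(self, connection):
        self._connection = connection
        self.current_message_id = None       # set before mapping each message
        self.statements = defaultdict(list)  # message ID -> list of SQL strings

    def execute(self, sql, params=()):
        # Record first, so the statement is logged even if execution fails.
        self.statements[self.current_message_id].append(sql)
        return self._connection.execute(sql, params)


# The legacy mapping code calls recorder.execute() instead of the library
# call it used before; everything else stays the same.
recorder = SqlRecorder(sqlite3.connect(":memory:"))
recorder.execute("CREATE TABLE patient (id TEXT, name TEXT)")
recorder.current_message_id = "MSG00001"
recorder.execute("INSERT INTO patient (id, name) VALUES ('12345', 'Smith')")
```

After the run, `recorder.statements["MSG00001"]` holds the SQL produced for that one message, which is exactly the pairing the regression tests need.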

Log It

The idea here is to log the SQL in a manner which is easy to relate back to the message that generated it. One trick is to create a database of this information or, if the legacy environment makes that awkward, to write files with the same information, which will work equally well. This table outlines the basic idea:

| Column Name | Description |
|---|---|
| MessageId | The unique message control ID of the message as found in the MSH segment. Or if that isn’t unique you can use an MD5 checksum as a method of generating a unique ID (use util.md5 in Iguana) |
| MessageData | The raw data of the message |
| SQL | List of SQL statements generated from the message. |

Then you are well on your way to having the pieces in place you need to make equivalent Lua code and regression test it against the legacy logic.
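One possible shape for that log, sketched in Python with SQLite as a stand-in database: the table name `legacy_log`, the helper names and the newline-joined storage of the SQL list are all assumptions made for illustration. The MD5 fallback here uses Python's `hashlib` rather than Iguana's `util.md5`.

```python
import hashlib
import sqlite3


def control_id(raw_message):
    """Return MSH-10 (message control ID), or '' if the MSH is too short."""
    fields = raw_message.split("\r")[0].split("|")
    return fields[9] if len(fields) > 9 else ""


def checksum_id(raw_message):
    """Fallback ID: MD5 hex digest of the raw message text."""
    return hashlib.md5(raw_message.encode("utf-8")).hexdigest()


def create_log(db):
    """Create the three-column log table if it does not exist yet."""
    db.execute(
        """CREATE TABLE IF NOT EXISTS legacy_log (
               MessageId   TEXT PRIMARY KEY,
               MessageData TEXT NOT NULL,
               "SQL"       TEXT NOT NULL)"""
    )


def log_message(db, raw_message, sql_statements, use_checksum=False):
    """Store one message and the SQL it produced; return the key used."""
    key = checksum_id(raw_message) if use_checksum else control_id(raw_message)
    db.execute(
        'INSERT INTO legacy_log (MessageId, MessageData, "SQL") VALUES (?, ?, ?)',
        (key, raw_message, "\n".join(sql_statements)),
    )
    return key
```

Set `use_checksum=True` when the control IDs in your feed turn out not to be unique; the primary key on `MessageId` will reject duplicates in either mode, which is a quick way to find out.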

Write Equivalent Lua Code

Once you have your dataset of HL7 in and SQL out, you have the perfect setup to write equivalent Lua code and regression test it.

Sometimes it doesn’t make sense to write exactly equivalent SQL code. There are tricks one can do to avoid the need to write slavishly equivalent code. One trick we have used with good success is to parse SQL INSERT statements so that the columns can be alphabetically sorted.
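The column-sorting trick might look something like the following Python sketch. It is deliberately simple: it assumes single-row `INSERT ... (columns) VALUES (values)` statements whose values are plain literals, and it leaves anything else (for example values containing function calls with commas) unchanged. All names are hypothetical.

```python
import re

INSERT_PATTERN = re.compile(
    r"^\s*INSERT\s+INTO\s+(?P<table>\S+)\s*\((?P<cols>.*?)\)\s*"
    r"VALUES\s*\((?P<vals>.*)\)\s*;?\s*$",
    re.IGNORECASE | re.DOTALL,
)


def split_values(text):
    """Split a VALUES list on commas that sit outside single-quoted strings."""
    parts, current, in_quote = [], [], False
    for char in text:
        if char == "'":
            in_quote = not in_quote  # an escaped '' toggles twice, so it nets out
        if char == "," and not in_quote:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(char)
    parts.append("".join(current).strip())
    return parts


def normalize_insert(sql):
    """Rewrite a single-row INSERT with its columns in alphabetical order."""
    match = INSERT_PATTERN.match(sql)
    if match is None:
        return sql.strip()  # leave anything we don't understand untouched
    columns = [c.strip() for c in match.group("cols").split(",")]
    values = split_values(match.group("vals"))
    if len(columns) != len(values):
        return sql.strip()  # parse was unreliable, so don't reorder
    pairs = sorted(zip(columns, values), key=lambda pair: pair[0].lower())
    return "INSERT INTO {} ({}) VALUES ({})".format(
        match.group("table"),
        ", ".join(c for c, _ in pairs),
        ", ".join(v for _, v in pairs),
    )
```

With both the legacy SQL and the new SQL passed through `normalize_insert`, two statements that differ only in column order or keyword case compare equal as strings.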

Once you do have your completed code, a good technique for final validation is to regression test two parallel runs into two empty databases.
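A comparison of the two resulting databases can be sketched as below. This Python example assumes both runs wrote into SQLite databases, which is only a stand-in for your real database; the helper names are hypothetical. Rows are sorted before comparing so that insertion order does not count as a difference.

```python
import sqlite3


def snapshot(db):
    """Return {table name: sorted list of rows} for every table."""
    tables = [
        row[0]
        for row in db.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    return {
        # key=repr gives a stable order even when rows contain NULLs
        table: sorted(db.execute('SELECT * FROM "{}"'.format(table)), key=repr)
        for table in tables
    }


def diff_databases(legacy_db, new_db):
    """List the tables whose contents differ between the two runs."""
    legacy, new = snapshot(legacy_db), snapshot(new_db)
    return sorted(
        table
        for table in set(legacy) | set(new)
        if legacy.get(table) != new.get(table)
    )
```

An empty list from `diff_databases` means the legacy pipeline and the new code produced the same rows in every table; any table named in the result is where to start looking.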

More complicated…

Most of life comes under the heading of “More complicated…”

Perhaps just as well, since if everything in technology were very simple there would be fewer people required to do it and we’d all be out of a job. One interface I heard of in practice worked something like this:

- A file is FTPed to a certain location, at which point the VB code/listener picks it up and moves it to an archive area, or to an error location if something is amiss.

- The program then breaks apart each and every segment of the message, re-assigns certain hard-coded values into variables, then rebuilds the message in a newly formatted HL7 form that is acceptable to internal applications.

- The program then calls a SQL stored proc, with the formatted message as an input parameter, to write to SQL tables.

- The internal application/Lab Information System is triggered to consume the data.

So how do you go about inserting Iguana into that work flow without breaking it?

Step 1

I would start with this problem by intercepting the messages at the first point:

- Reconfigure the VB program if possible to pick up the files from a new area.

- Configure Iguana to pick up those files and enqueue them in its own logging system.

- Have Iguana drop the files off where the VB program can pick them up.

If the VB program is not configurable and you lack the source code to it then an alternative solution would be to reconfigure the FTP server to drop the files into a different physical area and have Iguana pick the files up from there and drop them into the location the VB program is hard coded to watch.

If you wanted to you could even have Iguana look at the error directory to see which messages were rejected. See File and FTP Interfaces for more information.
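In Iguana itself this pick-up and drop-off is a matter of channel configuration rather than code. Purely to illustrate the flow described in Step 1, here is a small Python sketch with hypothetical directory roles: `inbox` is where the FTP server now drops files, `outbox` is the directory the VB program watches, and `archive` plays the part of the log.

```python
import shutil
from pathlib import Path


def relay_files(inbox: Path, outbox: Path, archive: Path) -> list:
    """Copy each file in inbox to archive, then move it on to outbox."""
    outbox.mkdir(parents=True, exist_ok=True)
    archive.mkdir(parents=True, exist_ok=True)
    relayed = []
    for path in sorted(inbox.iterdir()):
        if not path.is_file():
            continue
        # Keep a copy first, so the message can be replayed later.
        shutil.copy2(path, archive / path.name)
        # Then hand the original to the legacy program's watched directory.
        shutil.move(str(path), str(outbox / path.name))
        relayed.append(path.name)
    return relayed
```

The legacy program sees exactly the files it always did, just one hop later, while the archived copies give you the inbound half of a regression dataset.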

Step 2

Hopefully you can access the code to the stored procedure and tweak it so that the raw string of the input HL7 message can be obtained. Or you could rename that stored proc and make a new one with the old name to do the logging you need to do and invoke the old implementation.

If you can modify that procedure so that it picks out the message control ID of each inbound message and inserts the data into a table something like:

| Column Name | Description |
|---|---|
| MessageId | The unique message control ID of the message as found in the MSH segment. Or if that isn’t unique you can use an MD5 checksum as a method of generating a unique ID (use util.md5 in Iguana) |
| MessageData | The raw data of the message |

Then you are well on your way to having the pieces in place you need to make equivalent Lua code and regression test it against the legacy logic.

Tracing the data into the SQL tables is going to be tricky. If you can make an empty test database with the same structure, it might be possible to get a clearer idea of how the data flows from the input message into the database tables.

Step 3

Once you get some more visibility on the problem, it may become clearer whether the whole thing can be replaced in one go or whether a more conservative strategy of replacing just one part of the pipeline is more workable. With Iguana as part of the pipeline you can make immediate changes when you need to, which may take some time pressure off resolving the overall problem.