One of our partners had an interesting performance issue that they asked us to help with. They have a farm of Iguana servers feeding messages into another application, which uses IIS to receive them over HTTP.

Each channel sent messages to IIS one at a time using net.http.post. Throughput fell short of what was required: roughly 200 ms per message. For some reason IIS was not allowing persistent HTTP connections, so every message paid the overhead of opening a TCP/IP socket connection, sending the HTTP header data, and then tearing the TCP/IP connection down again.

That’s a lot of overhead per message.

There is also plenty of potential for other performance-killing interactions when 50 channels independently send data to IIS. Some of this could probably be sorted out by reconfiguring IIS, although I am not an IIS expert. Web servers typically do things like throttling frequent requests that can look like a denial-of-service attack; they may also refuse large numbers of concurrent connections, and there may be contention between threads within the IIS server.
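To put the 200 ms figure in perspective, here is a rough upper bound on aggregate throughput. It assumes each of the 50 channels sends its messages serially and that the channels do not slow each other down, which is optimistic given the contention described above:

$$
\frac{1\ \text{message}}{0.2\ \text{s}} = 5\ \text{messages/s per channel},
\qquad
50 \times 5\ \text{messages/s} = 250\ \text{messages/s in total}
$$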

Some of these problems could be resolved by configuring IIS differently. But rather than going through that effort, let's think a little harder about the core problem. What is the actual problem?

What is the fastest way to get the maximum number of messages/second from Iguana to the end application?

So here's a different route to solving the end problem.

Instead of having 50 Iguana channels pushing willy-nilly into IIS, let's turn the problem on its head:

- Set up a bank of LLP -> Filter -> To Channel channels.

- The Filter component can filter the data and transform it into, say, JSON.

- The external application then polls Iguana, using its RESTful API to query Iguana's logs.

- The external application retrieves all the messages from Iguana at one-second intervals.
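The polling side of this design can be sketched as a small closure that remembers where its last query window ended. This is a minimal sketch under stated assumptions: `MakePoller` is a hypothetical name, and `Fetch(After, Before)` is an assumed callback that returns a table of messages logged between two timestamps (in Iguana it could wrap an api_query request). Whether the API treats window boundaries as inclusive or exclusive is not confirmed here, so a real client may need to de-duplicate messages at the edges.

```lua
-- Hypothetical sketch of the polling client.
-- Fetch(After, Before) is an ASSUMED callback that returns a table of the
-- messages logged between the two timestamps (e.g. a wrapper around an
-- Iguana api_query request). Boundary inclusivity is not confirmed.
local function MakePoller(Fetch, Start)
   local Last = Start  -- end of the most recently queried window
   return function(Now)
      if Now <= Last then
         return {}  -- no time has passed since the last poll
      end
      local Messages = Fetch(Last, Now)
      Last = Now  -- the next window starts where this one ended
      return Messages
   end
end
```

The application would call the returned function about once a second, for example `Poll(os.time())`, from whatever scheduler it already has; standard Lua has no sleep function, so the timing loop is left to the host. Because each window starts where the previous one ended, consecutive polls cover the log without gaps.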

This is vastly more efficient with far less complexity:

- We pay the overhead of only one HTTP request and response across the wire, yet we can retrieve literally thousands of messages.

- This approach gets the most out of gzip compression on the HTTP transaction, since one large batch compresses far better than many small messages, so we send far fewer bytes over the wire.

- We can do this process with a single thread on the application side, so there is far less contention.

- There are also fewer threads on the Iguana side.
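A rough cost model makes the first point concrete. Let $c$ be the fixed cost of one HTTP round trip (connection setup, headers, teardown) and $m$ the cost of transferring one message's payload; these symbols are introduced here for illustration only. The cost per message when sending individually versus fetching $N$ messages in one response is:

$$
\text{individual: } c + m
\qquad
\text{batched: } \frac{c + N m}{N} = \frac{c}{N} + m
$$

With $N$ in the thousands, the fixed term $c/N$ shrinks toward zero, leaving mostly the payload cost.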

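I put together this little script to give an idea of the speeds possible with this approach. It runs the same log query ten times and prints the total number of messages retrieved, the elapsed seconds, and the messages per second: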

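```lua
-- Query Iguana's log API for all messages logged between Start and
-- Finish (Unix timestamps in seconds) and return how many were found.
local function QueryMessageData(Start, Finish)
   local After = os.ts.date("%Y/%m/%d %H:%M:%S", Start)
   local Before = os.ts.date("%Y/%m/%d %H:%M:%S", Finish)
   local X = net.http.get{url='http://localhost:6543/api_query',
      parameters={
         username='admin',     -- placeholder credentials
         password='password',
         after=After,
         before=Before,
         type='messages'
      },
      live=true                -- run the request while editing, too
   }
   X = xml.parse{data=X}
   return X.export:childCount('message')
end

function main()
   local Now = os.ts.time()
   local Count = 0
   -- Repeat the query ten times over the last 100,000 seconds (~28 hours).
   for i = 1, 10 do
      Count = Count + QueryMessageData(Now - 100000, Now)
   end
   local Elapsed = os.ts.time() - Now   -- whole seconds
   print(Count)
   print(Elapsed)
   -- Avoid dividing by zero if the run finishes within the same second.
   if Elapsed > 0 then
      print(Count / Elapsed)
   end
end
```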

Running on my MacBook Pro on battery power, I got well over 12,000 messages per second. At that rate the transport is no longer the bottleneck; the remaining performance question is simply how to process all those messages efficiently.

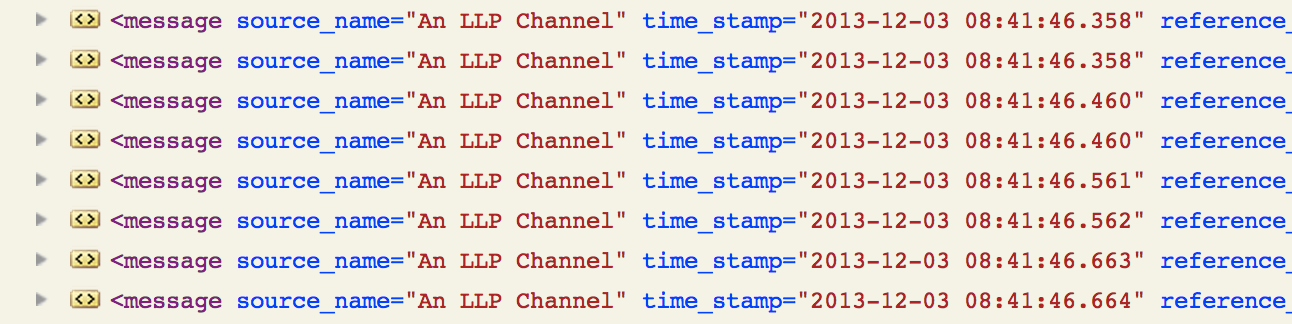

One very nice aspect of this solution is that the messages come out in queued order, and you get all the meta information, such as timestamps, too.

There are a few other pieces required for the overall solution. You might want a means of resubmitting messages; that's easy to achieve by building a little web application that queries an arbitrary range of data from a given channel and pushes it in much the same way.
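For such a resubmission tool, one possible building block is a helper that breaks an arbitrary time range into consecutive smaller windows, so that each query (and each push) stays a manageable size. The helper name `SplitRange` and the idea of chunking the range are assumptions for illustration, not part of Iguana:

```lua
-- Hypothetical helper (not an Iguana API): split the range [Start, Finish]
-- (seconds) into consecutive windows of at most Step seconds each.
local function SplitRange(Start, Finish, Step)
   assert(Step > 0, "Step must be positive")
   local Windows = {}
   local From = Start
   while From < Finish do
      local To = math.min(From + Step, Finish)
      Windows[#Windows + 1] = {From, To}
      From = To  -- each window begins where the previous one ended
   end
   return Windows
end
```

For example, `SplitRange(Start, Finish, 3600)` yields hour-long windows, each of which could then be queried through api_query and pushed on.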

If you have any questions about this tip, do feel free to ask.